I work a lot with systems that talk to other systems. Technically, this puts them in the class of distributed systems, but unlike databases or something similarly complex, it's usually some kind of PC (laptop, webserver), talking to some kind of embedded system (a USB device, a sensor).

Regardless of complexity, these systems need to talk to each other to achieve some goal: obtaining sensor data, changing configuration values, etc. For many simple cases like these, we can think of these systems as "phase locked" - they are two systems that move state-to-state at the same time.

This post explores a technique I've found, and that I think is useful (and fun?) for specifying protocols in Rust. Prefer to jump straight to the code? It's here on github.

A useful example

My go-to example of a "two system problem" is a bootloader:

- The "host" (a PC) holds the whole new firmware

- The "client" (an embedded system) doesn't have room to hold the whole new firmware in memory

- The host must send the firmware in pieces, to allow the client to handle it

- The client will need to erase the old firmware in relatively large blocks, say 4KiB at a time

- The host will need to send pieces of the new firmware in relatively small blocks, say 256 bytes at a time

- This process needs to repeat until the full image has been transferred

- The host finally says "good luck, boot into your new firmware"

This is harder to write than you might think

Writing code for this kind of problem usually means developing three separate components:

- A state machine for the client - code to walk through all the steps above

- A state machine for the host - usually pushing the client through each step

- A "wire protocol", for the actual kinds of message and the sequence of messages sent to achieve all the state transitions

This means I write three chunks of software in three places, and if I change any one part, I need to make sure the other two are consistent.

Yes, I know I'm writing three things. But that is frustrating, because I'm not writing three things: I'm writing one thing that exists in three places:

- On the client

- On the host

- Over the wire

I run into problems that are roughly this shape, but I haven't been able to find a good way of specifying directly in code. Often I end up drawing the sequence up on paper.

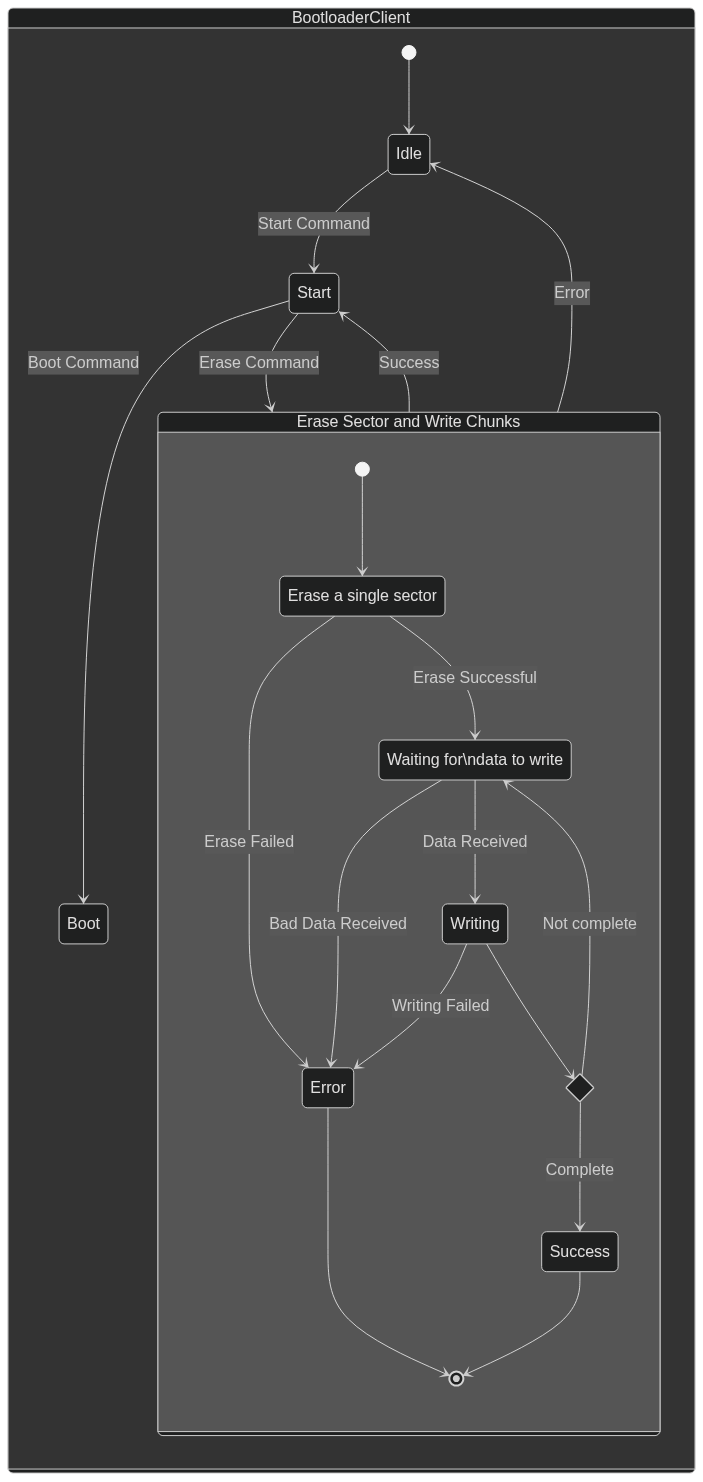

For state machines, this means drawing state machine diagrams, one for the client and one for the host. A client diagram could look like this:

For writing down the sequence of messages, this means drawing sequence diagrams, and making sure it is consistent with the state machines. The sequence diagram could look like this:

But I don't love this: docs don't always live with the code, and I am usually iterating pretty quickly when I am designing things off the cuff. Ideally, I'd like to be able to lean on the compiler to help make sure any changes I make between the three parts end up being consistent across the entire design.

Let's switch to looking at code

Let me make some assertions:

- All programs are state machines.

- If I write some code, it's a valid way of specifying a state machine

- Writing code is just as valid of a specification as a diagram

- Async programs are also state machines.

- We already said code is a state machine

- Async code also abstracts away the "wait for an event to occur" part of a state machine

- Traits (in Rust) are a way of separating "abstract behavior" from "concrete implementation".

- We specify what we want to happen

- We don't specify how we want that to happen

So how about some async rust code that describes our bootloader state machine?

async fn bootload(something: &mut Something) -> Result<(), ()> {

// start the bootloading, and get the image size

let image_end = something.start().await?;

// While there are more sectors to erase/fill...

while let Some(start) = something.next_sector() {

// ...erase the sector...

something.erase_sector(start, SECTOR_SIZE).await?;

let sector_end = start + SECTOR_SIZE;

let mut now = start;

// ...while there is more data to write in this sector...

while (now < sector_end) && (now < image_end) {

// ... write the chunk to disk

now += something.write_next_chunk().await?;

}

}

// All done, now boot.

something.boot().await?;

Ok(())

}

That seems like a decent representation of the system I described above.

Let's look at this one chunk at a time:

let image_end = something.start().await?;

We start the bootloading.

- The host (the PC) should send a message to the client (the embedded system) to start the bootloading. It should send the total size of the image we want to send. It should then wait for an acknowledgement.

- The client receives the message, and knows that the client would like to start bootloading. It receives the total size of the image the host wants to send. The client should also send an acknowledgement to say "yes go ahead thank you"

while let Some(start) = something.next_sector() { /* ... */ }

We iterate over the number of sectors. Both the host and client will know how many sectors need to be written.

// ...erase the sector...

something.erase_sector(start, SECTOR_SIZE).await?;

We need to erase a given sector.

- The host should send a message to prompt the erasing of a section, and wait for an ACK

- The client should erase the given sector, and send a message back once the erasing has completed.

let sector_end = start + SECTOR_SIZE;

let mut now = start;

// ...while there is more data to write in this sector...

while (now < sector_end) && (now < image_end) {

// ... write the chunk to disk

now += something.write_next_chunk().await?;

}

We need to send manageable chunks of the new firmware image, until we have either filled this now-empty sector, or until we have finished transferring the whole firmware image.

- The host should send one chunk at a time, waiting for an acknowledgement before proceeding.

- The client should write the chunk of data, confirming once the write is complete

// All done, now boot.

something.boot().await?;

Once we are done transferring, we should prompt the client to boot into its new firmware.

- The host should send a "okay all done now boot" message, and wait for confirmation

- The client should say "okay here I go byyyeeeeee"

Okay that's cool but what?

Note that there are no mentions of "client" or "host" in the code above, yet it still describes a state machine that is suitable for both the host and client.

I could copy and paste this code to both projects, or I could instead somehow make this generic over whether this state machine is running on a host, or running on a client.

Like I said before, this is what traits are for. Let's turn each of the function calls above into trait methods instead. We can think of each of these functions as a state transition:

trait TraitMachine {

const SECTOR_SIZE: usize;

const CHUNK_SIZE: usize;

fn next_sector(&mut self) -> Option<usize>;

// These are all the joint state transitions, basically?

async fn start(&mut self) -> Result<usize, ()>;

async fn erase_sector(&mut self, start: usize, len: usize) -> Result<(), ()>;

async fn write_next_chunk(&mut self) -> Result<usize, ()>;

async fn boot(&mut self) -> Result<(), ()>;

async fn abort(&mut self) -> Result<(), ()>;

}

Now we can make our async function generic over that trait:

async fn bootload<TM: TraitMachine>(tm: &mut TM) -> Result<(), ()> {

// start the bootloading, and get the image size

let image_end = tm.start().await?;

// While there are more sectors to erase/fill...

while let Some(start) = tm.next_sector() {

// ...erase the sector...

tm.erase_sector(start, TM::SECTOR_SIZE).await?;

let sector_end = start + TM::SECTOR_SIZE;

let mut now = start;

// ...while there is more data to write in this sector...

while (now < sector_end) && (now < image_end) {

now += tm.write_next_chunk().await?;

}

}

// All done, now boot.

tm.boot().await?;

Ok(())

}

Implementing the steps as separate pieces

But what does it look like to specify separate behavior for each entity?

On the Host

Let's look at the start method on the host:

impl TraitMachine for Host {

// ...

async fn start(&mut self) -> Result<usize, ()> {

self.channel

.send(Host2Client::Start {

total_size: self.image.len(),

})

.await?;

match self.channel.recv().await? {

Client2Host::Starting => Ok(self.image.len()),

_ => Err(()),

}

}

// ...

We:

- Send a "start" message to the client, with the total image size

- We wait for a "starting" acknowledgement message from the client

On the Client

Now let's look at the start method on the client:

impl TraitMachine for Client {

// ...

async fn start(&mut self) -> Result<usize, ()> {

match self.channel.recv().await? {

Host2Client::Start { total_size } if total_size <= Self::TOTAL_SIZE => {

self.image_len = Some(total_size);

self.channel.send(Client2Host::Starting).await?;

Ok(total_size)

}

_ => Err(()),

}

}

// ...

}

We:

- Wait for a "Start" message from the host, with the total image size

- We send a "starting" acknowledgement to the host

Mission Accomplished?

Okay, but is this actually "better" than other techniques?

I dunno. I haven't seen anyone do it like this before, and it seems neat that I can use out-of-the-box tools of Rust to achieve this.

The whole demo is here, and you can run it for yourself to see how it works.

I don't think this could be a "library", but more like a "pattern", similar to how things like xtask work.

Things this pattern does well

There are a couple of things that I think this pattern definitely helps with:

- It distills the "state transitions" into two main independent parts

- One async function, that describes the "state machine"

- A trait that provides a listing of all the "state transitions"

- It doesn't specify how you communicate between two systems

- It could be "in-memory", over channels, like are done in the demo code

- It could be "over the wire", using Serde to serialize and deserialize messages on each side

- This makes it easy to test exhaustively, using tools like fuzzers or property based testing

- It's just "normal rust code"

- We don't do any tricky code generation with external tools or procedural macros

- We don't use any tricky type system tools, like type state or session types

- Ideally this makes the code easier to read, and for a human to review for correctness

- We are not limited to certain capabilities that a DSL or other tool might not be able to express

- This pattern plays very well with erlang-style "fail early" protocols

- It is very easy to wrap the whole state machine function with an "on error, send NAK" step

- This means that all error handling can just use the

?operator, which implicitly "resets" the state machine

- Subjectively, I find it fun to write the code this way, and it breaks the problem into reasonable pieces

- Writing the async function first makes me really think about what the system does as a unit

- Every trait method I add ends up being another transfer of messages, which makes the protocol "explicit"

Things this pattern doesn't address

There are definitely some drawbacks to this pattern:

- It's very possible some constructs could be awkward to specify using this pattern

- I've only really tried it with a couple problems so far

- Not every communication protocol falls into the "phase locked" category

- This pattern doesn't help you "formally" (or pseudo-formally) specify the wire protocol

- You COULD write client and host trait impls that don't "mesh" with each other

- By allowing "any rust code", it becomes just as complex to test or verify as any other rust code

- This sort of formal specification is what session types or other DSLs aim to aid with

- It still requires a human to look at both steps to ensure consistency, but ideally makes those steps easy to look at "side by side".

- It doesn't help with things like "protocol versioning"

- For systems where you have two separate programs for the client and server, they could fall out of sync

- You still need some kind of "wire format" that lets the client and server ensure they are consistent

- It works best with async traits, which aren't stable yet

- Today you still need the

#![feature(async_fn_in_trait)]feature and a nightly compiler - You could write it without this feature, or without async, but I think it would be less pleasant

- Hopefully, this should all be stable by the end of 2023

- Today you still need the

Wrapping up

Should you use this? I don't know! Maybe try it out, and let me know how it goes!

I think it is neat, and is maybe the first step towards a more general, or more useful, solution.

Need help with building something in Rust? Maybe I can help!.